A behind-the-scenes look at a new film about artificial intelligence by the creators of Chef’s Table.

By Meleah Maynard

Anyone who has watched a lot of science fiction knows it’s never a good idea to get on AI’s bad side. Get snarky with Siri and Alexa, and future artificial intelligence might just make an example of you during the robot apocalypse. Touted as being capable of saving lives as well as destroying them, AI is developing at a pace far faster than humans’ ability to understand how it may affect our world in the future. What will happen when AI can outthink us? And how will machines reflect the good, and the bad, aspects of human nature?

In his new film, Machine, director Justin Krook explores the research and creation of AI and asks experts to weigh in on the practical uses of machine intelligence, as well as its potential perils. Australian production company FINCH commissioned Never Sit Still and Luxx to create 3D animations of neural networks, which were used to explain some of the film’s complex concepts.

I asked Justin Krook (I’ll Sleep When I’m Dead, Chef’s Table, Black Mirror); Never Sit Still Creative Director, Mike Tosetto; and Luxx Creative Director, Tim Clapham, to talk about the making of the film, which was produced by the makers of Chef’s Table. Here they explain some of the thinking behind the film, and how they used Cinema 4D, X-Particles, Ubertracer2 and Redshift to help visualize the impact of artificial intelligence as it becomes an integral part of everything from social media and sex dolls to autonomous cars and super intelligence.

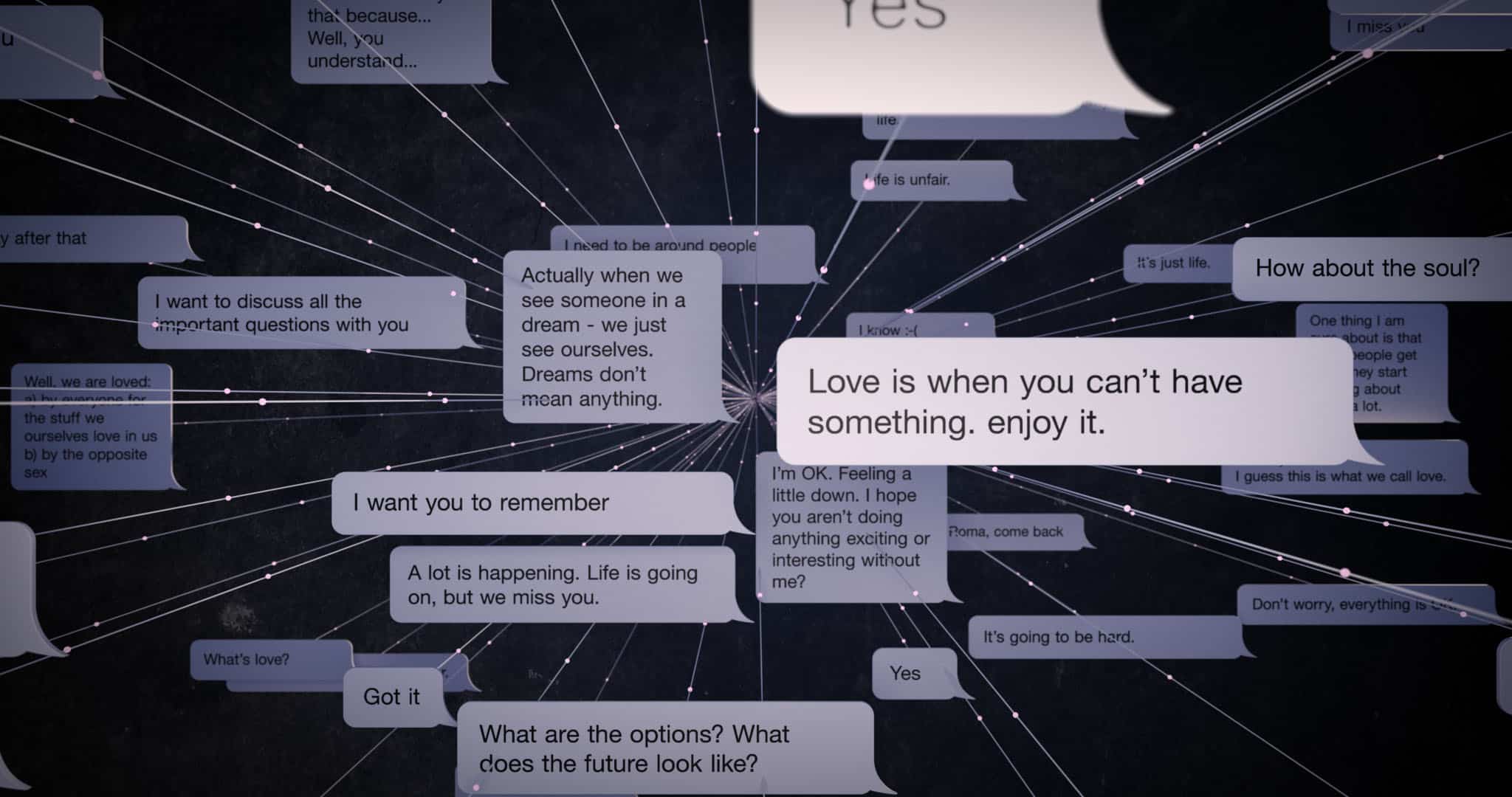

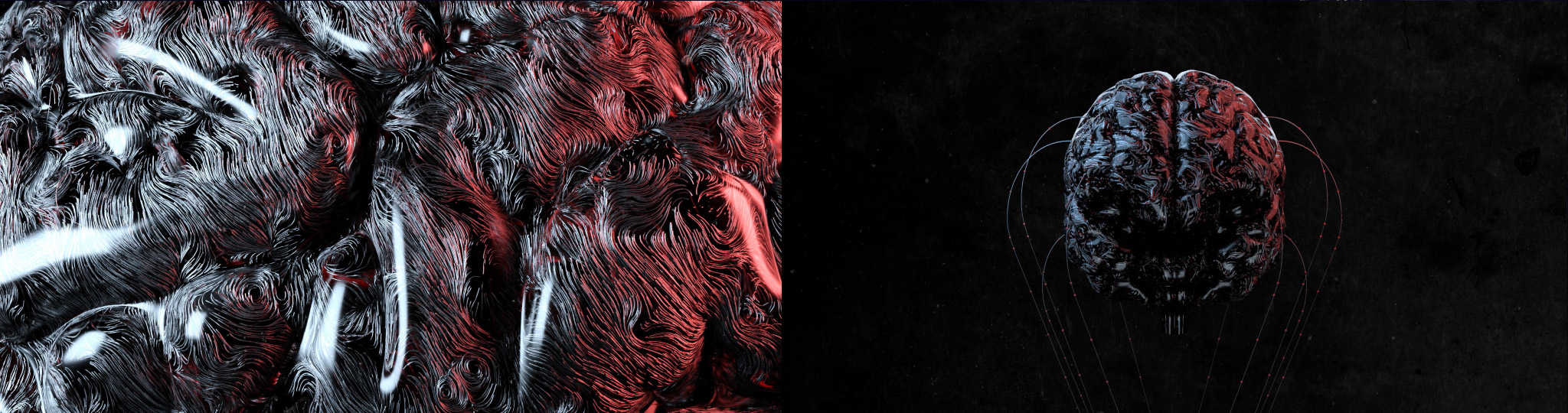

These visualizations show how the app, Replika, uses deep-learning architecture to create an AI “best friend” that can mimic the way you write and talk.

Justin, can you start by talking about why you wanted to direct this film?

Justin Krook: I was introduced to the producer, Michael Hilliard, during the initial stages of development. He was ranting about this AI software that had just beaten the world champion at Go almost a decade before the experts predicted it would happen. We realized that the world was standing on the brink of incredible change, and most people we talked to were utterly misinformed about where we were headed. Making this film was our way of starting an important discussion, and giving our audience a toolset to help them navigate some of the challenges we’ll all face in the coming decades.

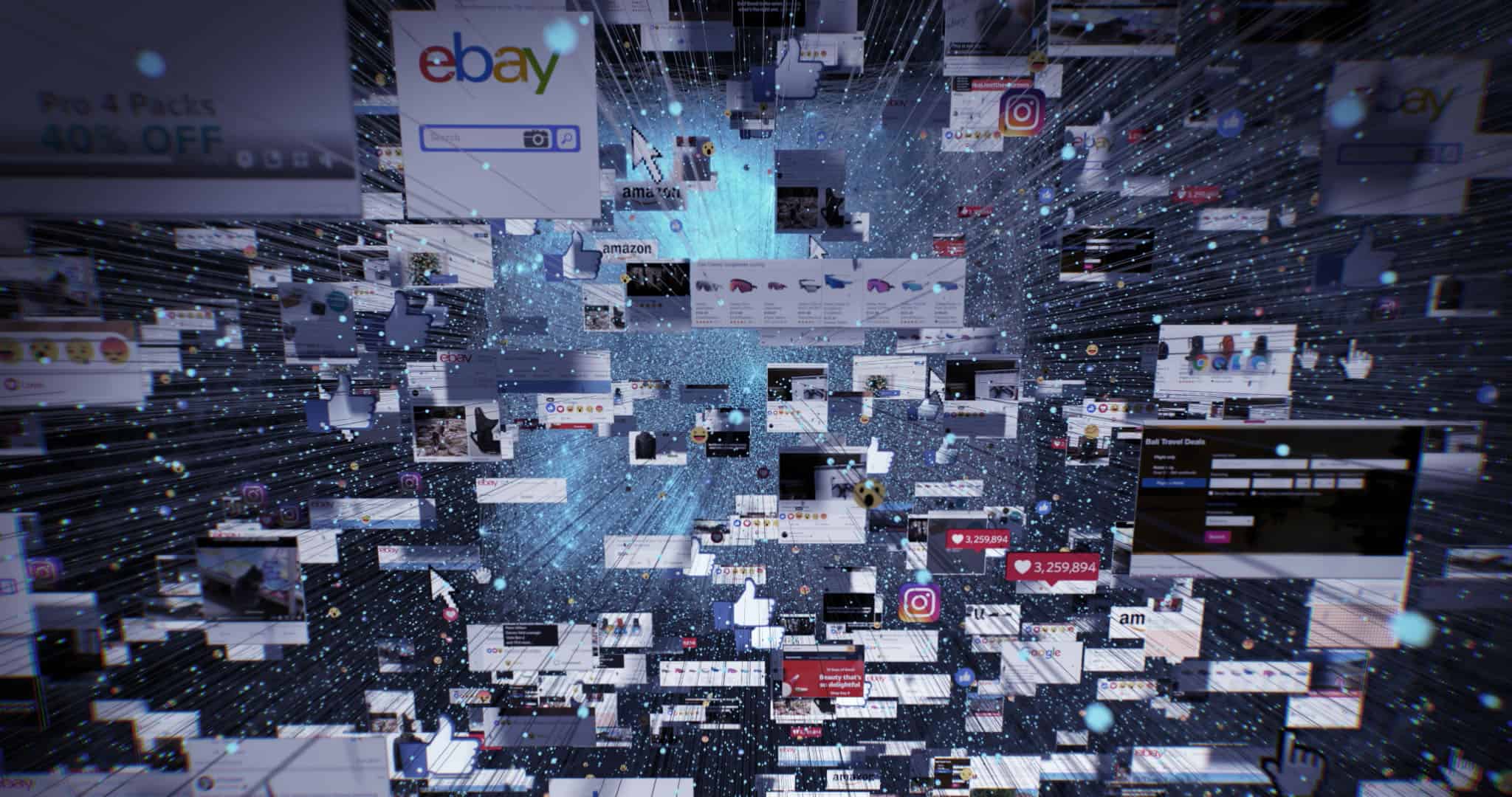

Mike Tosetto and Tim Clapham created a series of 3D shots for the part of the film that explores how online tech companies, like Facebook, use AI to track, monitor and gather data from users of social media and journalism platforms.

Have you always been interested in artificial intelligence?

J.K.: I’ve been interested in AI since watching Stanley Kubrick’s 2001: A Space Odyssey. My introduction to AI was typical in that 2001 taught me to fear a machine with super-human intelligence. However, as I’ve become more involved in the subject, I’ve realized that my fear was a bit misplaced. AI systems are designed by humans and are ultimately reflections of our values. If HAL became a homicidal maniac out of self-preservation, or dedication to the mission above all else, it was due to the flawed code humans created. As we continue to design more advanced AI systems, we’re creating the building blocks of what might amount to a HAL 9000 someday. Will our human flaws work their way into these systems, or will we create tools that celebrate the best parts of humanity? These sorts of big questions about humanity are what really interest me in AI.

These 3D shots were created for another part of Machine, which examined how scientists use AI in their work. Yukiyasu Kamitani, for example, has used functional neuroimaging to scan people’s brains as they slept in order to decode the visual content of their dreams.

Mike and Tim, how did you get involved with this project?

Mike Tosetto: We’d both seen Justin’s previous film, I’ll Sleep When I’m Dead. We met him through Toby Pike, the art director at FINCH, who approached Never Sit Still and requested that we team up with Luxx to work on the film’s 3D visualizations. Tim and I share a studio in Sydney, and we often collaborate on commercial projects, including some great things like the Sydney Opera House projections for Vivid Festival and the opening titles for Adobe’s Australasian creative conferences. Toby was the creative director on this film, and he brought Justin over to our studio to meet us. We hit it off immediately.

What kind of project brief did you get, and where did you go from there creatively?

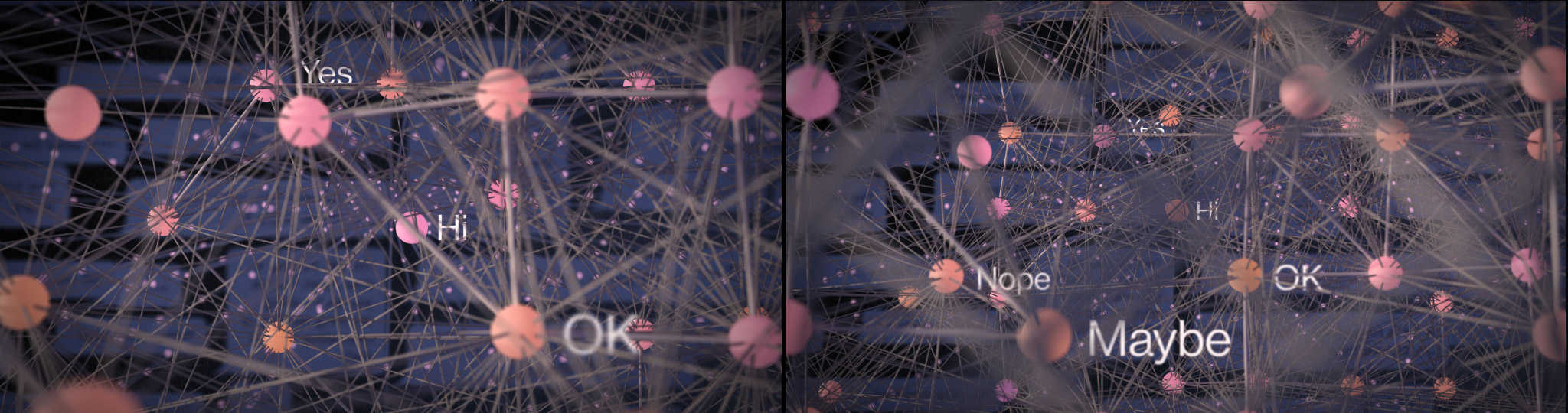

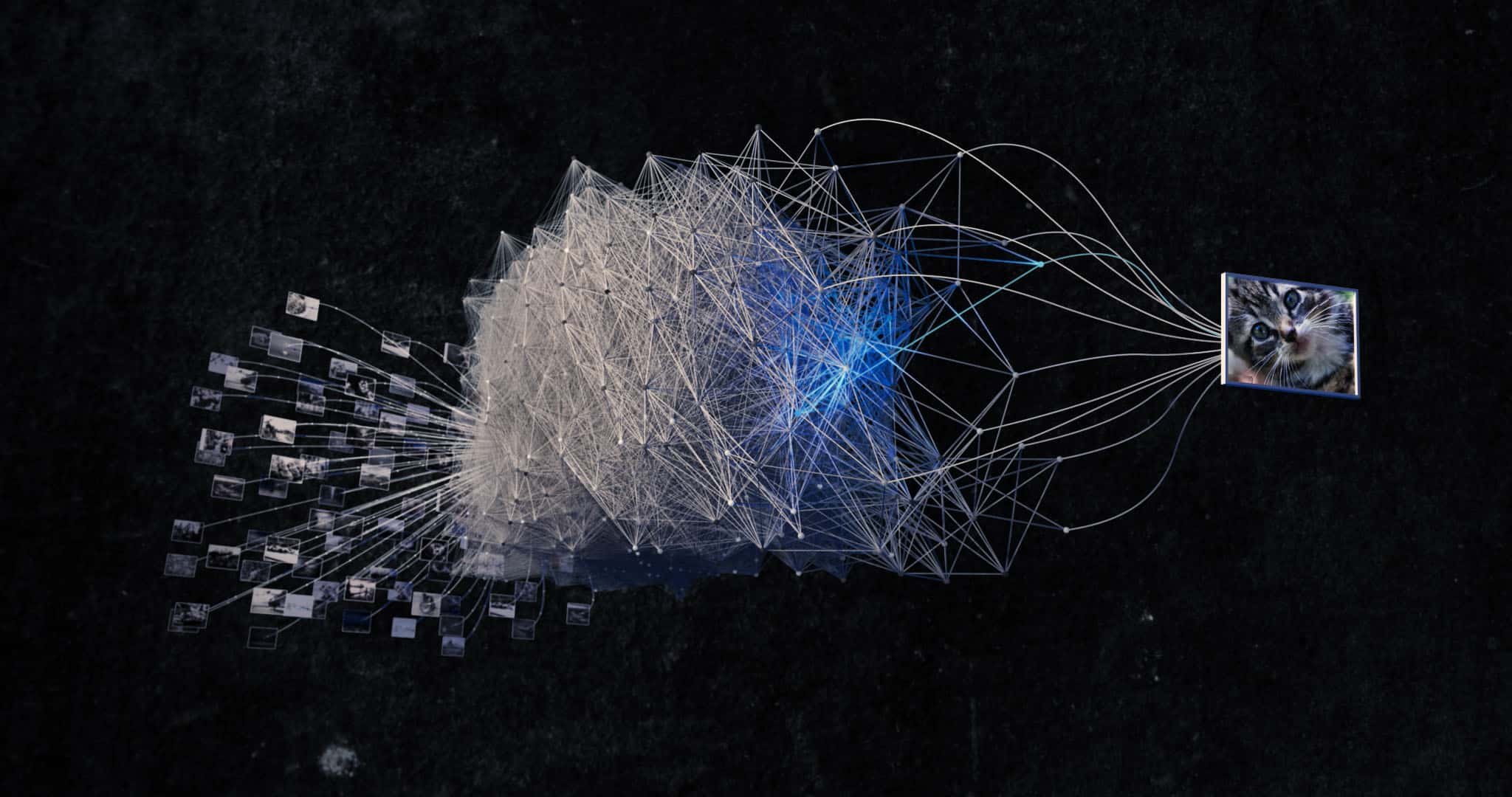

Tim Clapham: Our brief was to build a neural network, and while the requirements were well defined, there was room for interpretation as to how our neural network could be designed. Toby had plenty of reference and mood boards to help get us started, although the process was very organic, and we were given a lot of creative freedom. There was also a lot to learn because we needed to understand how a neural network works in order to build and rig one in a 3D environment. Essentially, a network is composed of modeled neurons that are stacked together to make a single layer, then lots of these layers are used in combination for deep learning. We were fortunate to see a very early cut of the film, and the FINCH team also gave us a machine-learning crash course.
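As a rough illustration of the structure Tim describes (neurons stacked into a layer, and layers chained together), here is a minimal sketch in plain Python. It is not taken from the film or from the studios' rig; the function names, the layer sizes and the choice of a sigmoid activation are all assumptions made for the example.

```python
import math
import random


def make_layer(n_inputs, n_neurons, rng):
    """One layer: a stack of neurons, each with its own weights and bias."""
    return [
        {
            "weights": [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)],
            "bias": rng.uniform(-1.0, 1.0),
        }
        for _ in range(n_neurons)
    ]


def neuron_output(neuron, inputs):
    """Weighted sum of the inputs plus bias, squashed by a sigmoid (assumed)."""
    total = sum(w * x for w, x in zip(neuron["weights"], inputs)) + neuron["bias"]
    return 1.0 / (1.0 + math.exp(-total))


def make_network(layer_sizes, seed=0):
    """Chain layers so each layer takes the previous layer's outputs as inputs."""
    rng = random.Random(seed)
    return [
        make_layer(n_in, n_out, rng)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    ]


def forward(network, inputs):
    """Pass the inputs through every layer in turn."""
    activations = inputs
    for layer in network:
        activations = [neuron_output(neuron, activations) for neuron in layer]
    return activations


# Hypothetical sizes: 3 inputs, two hidden layers of 4 neurons, 2 outputs.
network = make_network([3, 4, 4, 2])
outputs = forward(network, [0.5, -0.2, 0.1])
```

Here the weights are random, so the outputs mean nothing; the point is only the shape of the thing, which is what the 3D rig had to represent visually.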

Never Sit Still and Luxx created this opening 3D shot to establish the neural network upon which much of the film is based.

Once the development of neural networks was explained, the film moved on to visualizing the structure of deep learning.

M.T.: Toby sketched out the storyboards for the animation of each section, which helped get us started in 3D. We had three months to work on this, and we developed a range of designs for the overall look of the neural network before homing in on one of them and refining it. Tim used Cinema’s MoGraph toolset to build a rig that allowed us to easily create arrays of nodes by stacking cloner objects and building multiple layers of null objects. The Ubertracer plugin was added so we could quickly and easily create spline connections across the same layer and between additional layers.

To visualize the complexity of a neural network, they built a flexible rig using C4D’s MoGraph and UberTracer2.

T.C.: The rig defined the shape and structure of the neural network, but was flexible enough to be reshaped and scaled, depending on the application. We created more custom rigs to link multiple B-splines to cloned objects to show data entering and traveling through the network. This was achieved with MoGraph cloners, using Xpresso and Python to link the positions of the generated objects to the vertices of the splines.
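The idea behind such a rig can be sketched outside Cinema 4D. The example below is plain Python and does not use the Cinema 4D API, MoGraph or Xpresso; it only mimics the logic described above under stated assumptions: nodes arranged in grids per layer, connections drawn between adjacent layers, and a "data" object whose position is driven by the vertices of a path. All function names and default values are hypothetical.

```python
def layer_positions(layer_index, rows, cols, spacing=1.0, layer_gap=5.0):
    """Grid of node positions for one layer, centered on the layer's axis."""
    x = layer_index * layer_gap
    y_offset = (rows - 1) * spacing / 2.0
    z_offset = (cols - 1) * spacing / 2.0
    return [
        (x, r * spacing - y_offset, c * spacing - z_offset)
        for r in range(rows)
        for c in range(cols)
    ]


def connect_layers(layer_a, layer_b):
    """Every node in one layer connected to every node in the next."""
    return [(a, b) for a in layer_a for b in layer_b]


def point_on_path(vertices, t):
    """Position at fraction t (0..1) along a path of two or more vertices.

    Each segment gets an equal share of t, regardless of its length.
    """
    t = min(max(t, 0.0), 1.0)
    segments = len(vertices) - 1
    scaled = t * segments
    index = min(int(scaled), segments - 1)
    local = scaled - index
    start, end = vertices[index], vertices[index + 1]
    return tuple(s + (e - s) * local for s, e in zip(start, end))


# Three layers of 2 x 2 nodes, with connections between the first two layers.
layers = [layer_positions(i, rows=2, cols=2) for i in range(3)]
connections = connect_layers(layers[0], layers[1])

# A "data" marker halfway along the first connection.
marker = point_on_path(list(connections[0]), 0.5)
```

Animating `t` from 0 to 1 moves the marker from one node to the next. In the actual rig, the equivalent link between object positions and spline vertices was made inside Cinema 4D, and the details of that setup are not described here.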

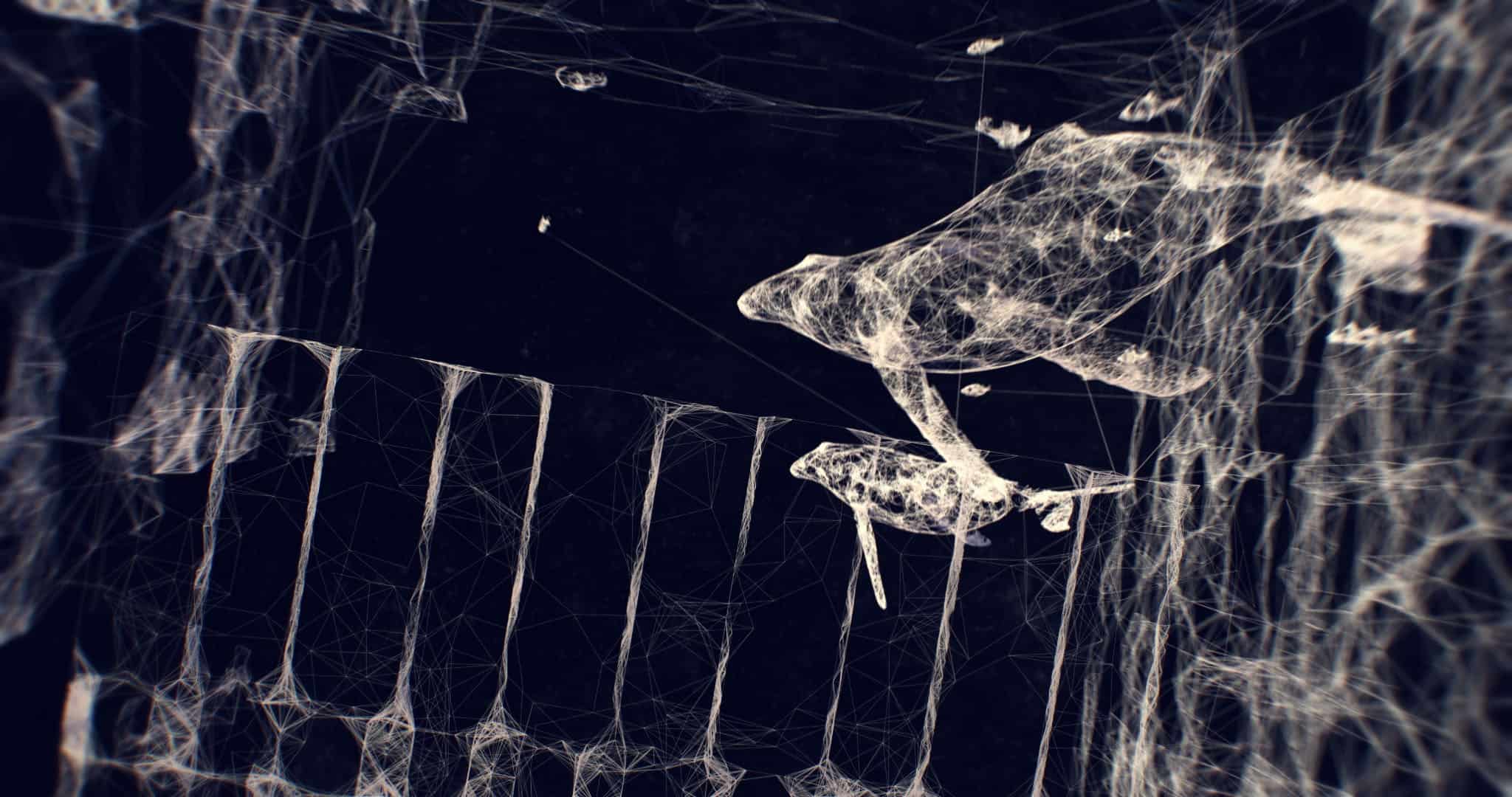

The hero neural network was given a dark, monochromatic treatment, and then we introduced color as we adapted the network for a total of nine different scenes, including numerous shots within each scene. We all got pretty excited once we started seeing the neural network come to life, so the team at FINCH extended our scope and asked us to create one of the biggest CG sequences of the film: Super Intelligence. That was a great confidence boost for us, and a challenging shot to take on.

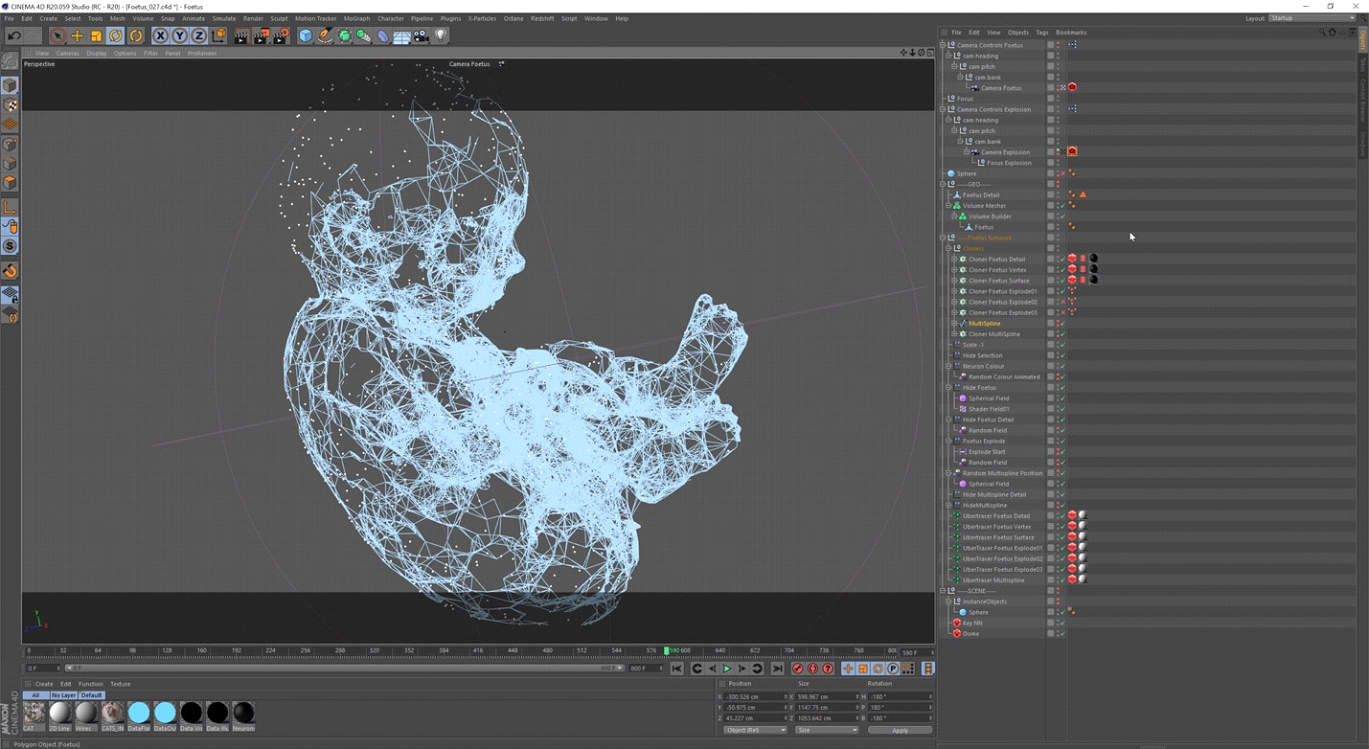

Never Sit Still and Luxx built the Super Intelligence sequence, which shows the birth of the digital fetus (above) and moves through the city, whales (below), crowds of people and trees, to explain how AI may one day develop on its own.

Justin, what did you find most challenging about trying to visualize these ideas?

J.K.: We wanted to ensure that the images of what future technology might look like weren’t cold or foreign. The technologies we discuss in the film are ultimately a reflection of society’s own values, so we wanted to make them relatable and human. When it came to visualizing concepts, like neural networks, that help explain how artificial intelligence works, we had to adhere to a certain scientific standard. That was a challenge because, on the one hand, we had to make these animated sequences beautiful, easy to understand and fun to watch. On the other hand, we had to pass a scientific litmus test, so it was important to find the right balance.

Explain a bit about your editing and rendering process for this.

T.C.: All of the shots were edited in Adobe Premiere and composited and graded in After Effects. We exported the camera data from each scene in Cinema 4D and imported it into After Effects, so we would have the same movement and space for our composited elements. We used Insydium’s Cycles to render the shots of the brain, which were created by emitting particles across the surface of geometry and tracing the paths. Other than that, we primarily used Redshift for rendering: not only is it a solid and fast GPU renderer, but it offers a feature that allowed us to render the Ubertracer splines without creating any geometry. That was essential for a fast workflow, as the scenes remained relatively light even though we were building these huge, complex networks.

This C4D screenshot shows how Super Intelligence starts with one type of neuron in the tip of a baby’s finger.

We had to render thousands and thousands of frames at 2K resolution, and using global illumination with millions of very fine lines was a challenge for the renderer, too. Calculating the geometry put a lot of stress on our machines, and the render times were also quite hefty. We rendered the project on about ten GPUs across four machines in our studio using Redshift.

Justin, was there anything you wanted to visualize that couldn’t be done?

J.K.: Given our tight schedule, I’m very impressed by how closely our overly ambitious boards line up with the finished product. We threw a lot of ideas at the team, and they came up with some very clever technical methods to accomplish what we were looking to do creatively.

Credits:

A FINCH Production

Director: Justin Krook

Producer: Michael Hilliard

Cinematographer: Anna Howard

Editor: Scott Walmsley

Writers: James Maclurcan & Luke Mazzaferro

3D Visualizations: Never Sit Still & Luxx

Music Composition: Matteo Zingales at Sonar Music

Sound Design and Audio Mix: Sonar Sound

Meleah Maynard is a writer and editor in Minneapolis, Minnesota.